Using Your CAPP Data

We regularly collect data about youth and adult participants, program implementation, and trainings for reporting purposes. But we can use that data for more than just reports!

Asking the right questions allows us to use our data for evaluation purposes that help:

- Determine effectiveness: Are we achieving our program goals and objectives?

- Provide program insights: What components of the program work and why? What factors support or impede implementation?

- Continually improve the program: Where can we make adjustments to improve the effectiveness of our program?

- Communicate success to stakeholders: What can we say about the successes of our program to participants, parents, community members, and/or funders?

Getting Started

Before exploring your data, it's important to understand what you're looking to do with the information that you find. Do you want to improve attendance or the fidelity of your evidence-based program (EBP) implementation? Maybe you want to compare especially successful EBP cycles to others that have not been so successful.

Reports available through the CAPP/PREP Dynamic Data Summary allow you to gain new and customized insights from your evaluation data, which in turn will help you identify effective strategies. Try exploring these interactive features on your own using the reflection tool below, but always feel free to reach out to your ACT for Youth Support Team as well.

Determining Effectiveness

It is important to be familiar with, and to regularly review, your CAPP project goals and objectives. These include goals related to the curricula you are implementing and the organizational goals established in your work plan. This review helps you identify where you may need additional support or attention while exploring your data. For example, are you on track to meet your cycle goals for the year? Are you reaching the youth populations that you want to reach? Are you seeing the outcomes that you would expect as a result of youth participation?

Providing Program Insight

Beyond determining whether our programs are effective, data can help us determine which components of our program are working well and why. We can use data collected in the online reporting system (ORS), along with feedback from participants, educators, and other stakeholders, to identify which aspects of implementation help or hinder successful programming. For example, is attendance more consistent in some settings than in others? Which activities are modified or skipped most often? This information is useful to the initiative as a whole and can be especially useful to individual projects. By reflecting on your data and flagging areas of concern, you can begin prioritizing the areas that need the most attention for improvement.

Continually Improving the Program

Continuous Quality Improvement (CQI) is a structured process that uses data to guide a series of small changes meant to improve the quality of programming and outcomes. By analyzing data, you can identify where small changes can be made, collect new data, and determine whether the changes helped. This process is commonly structured as a Plan-Do-Check (or Study)-Act (PDCA) cycle:

- Plan: Develop the aim or goals you would like to achieve, decide how you will measure successful change, and determine what steps are necessary to achieve your goal.

- Do: Put your plan into action.

- Check (or Study): Reflect on your results and determine whether the change was a success.

- Act: Put your change into practice to make it permanent, or restart the process if it was unsuccessful.

When planning, remember to set SMART goals: Specific, Measurable, Attainable, Relevant, and Time-bound. While sweeping changes can be appealing, consider aiming for "low-hanging fruit": small adjustments that can easily be integrated into what you are already doing and that may add up to a larger impact. Small, strategic course corrections use fewer resources and may also help build buy-in among your colleagues, since not everyone is enthusiastic about change. Emphasize that evaluating your program is not about judging anyone's work. It is about better understanding how and why a program works and where improvements can benefit both the people you serve and the people providing the services.

Communicating Success to Stakeholders

Stakeholders are individuals or groups who are interested in the success of your program. For example, key stakeholders for your organization might be the youth you serve, their parents, schools, other community-based organizations, and funders. Communicating your program's success to stakeholders can serve a variety of purposes, such as recruitment, program expansion, or increased funding. Not all stakeholders will be interested in the same information, so it is important to identify your audience and what they might want to know about your program.

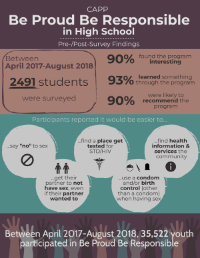

Once you determine what information a stakeholder might want to know, you need to decide how you will present your data. For example, we know that funders are interested in the numbers associated with topics such as reach, attendance, and fidelity, presented in a typical report. Young people might be more interested in positive feedback from other youth, the topics covered in the program, or the typical program length, presented in a more creative format such as a poster, infographic, or social media post. Whether you are using the ORS, PowerPoint, or social media, you can tailor your messaging to the interests of each stakeholder group.

Check out some of our infographic examples for inspiration:

Gathering Data

The data collected in the online reporting system (ORS) can help answer many of these evaluation questions! This system includes information such as reach, cycle length, attendance, and fidelity. The more data we collect, the more questions we can answer about our performance. For example, we can consider questions like:

- Reach: How many young people have we engaged? Are we reaching the youth population we want to reach?

- Cycle Length: Is the number of sessions and weeks needed to complete a cycle appropriate? What factors might affect cycle length?

- Attendance: Are we meeting the performance standard for attendance? (All participants should attend at least 75% of the sessions.) How does attendance vary by EBP, setting, educator, age group, or gender? What factors might be impacting attendance?

- Fidelity: What kinds of adaptations are we making most often? Which activities are adapted most frequently? Do adaptations vary by settings or educators?


Overall:

- How are things like EBP, setting, priority population, number of participants, and cycle length connected to attendance and adaptations?

- How does our work align with what others using this EBP are doing?

- How does our work align with developer implementation guidelines?

- How can we improve attendance and fidelity?

- What is important about the context in which we are implementing that is not reflected here?

- What data or questions should be discussed with our team of educators? Our ACT support team? Our larger organization? Our implementation site leaders? The larger community?
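The attendance standard mentioned above (attending at least 75% of the sessions) comes down to simple arithmetic: sessions attended divided by sessions in the cycle. Here is a minimal Python sketch of that check, assuming you have exported attendance counts into records with hypothetical fields (participant ID, setting, sessions attended, sessions in the cycle). These field names are illustrative only and are not ORS field names or an ORS feature, and the sample values are made up. The sketch computes each participant's attendance rate, flags who meets the 75% standard, and summarizes the share of participants meeting it by setting. That summary is one way to start on the question of how attendance varies by setting.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

# Hypothetical record layout (not ORS field names): one row per participant per cycle.
@dataclass
class ParticipantRecord:
    participant_id: str
    setting: str            # e.g., "school" or "community"
    sessions_attended: int
    sessions_in_cycle: int

# Standard as stated in the text: attend at least 75% of the sessions.
ATTENDANCE_STANDARD = 0.75


def attendance_rate(record: ParticipantRecord) -> float:
    """Share of the cycle's sessions this participant attended (0.0 to 1.0)."""
    if record.sessions_in_cycle <= 0:
        raise ValueError("a cycle must have at least one session")
    return record.sessions_attended / record.sessions_in_cycle


def meets_standard(record: ParticipantRecord) -> bool:
    """True if the participant attended at least 75% of the sessions."""
    return attendance_rate(record) >= ATTENDANCE_STANDARD


def share_meeting_standard_by(
    records: Iterable[ParticipantRecord],
    key: Callable[[ParticipantRecord], str],
) -> Dict[str, float]:
    """For each group (e.g., each setting), the fraction of participants meeting the standard."""
    totals: Dict[str, int] = defaultdict(int)
    meeting: Dict[str, int] = defaultdict(int)
    for record in records:
        group = key(record)
        totals[group] += 1
        if meets_standard(record):
            meeting[group] += 1
    # Every group in `totals` has at least one participant, so no division by zero.
    return {group: meeting[group] / totals[group] for group in totals}


if __name__ == "__main__":
    # Made-up example values for illustration only.
    records = [
        ParticipantRecord("A1", "school", 8, 8),
        ParticipantRecord("A2", "school", 5, 8),
        ParticipantRecord("B1", "community", 6, 8),
        ParticipantRecord("B2", "community", 7, 8),
    ]
    for r in records:
        print(r.participant_id, f"{attendance_rate(r):.0%}", meets_standard(r))
    print(share_meeting_standard_by(records, key=lambda r: r.setting))
```

With these sample values, the summary shows half of the school participants and all of the community participants meeting the standard. If your exported records include other fields, such as EBP, educator, or age group, you could compare those groups instead by changing the `key` function.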

Your pre-post surveys are also a great source of information about your programming.

Overwhelmed?

Understanding and finding ways to use all of your evaluation data can be overwhelming, especially at first. But the insights you gain will help you identify effective strategies for improving EBP delivery and accomplishing your organizational goals. We hope you'll find this information useful, but remember — your ACT for Youth Support Team is always here to help!